Introduction to the OWASP Top 10 (at a former employer in May 2023)

This is an edited transcript of a talk I gave at GoodHuman about the OWASP Top 10. The talk is available in my quick-presentations repository.

The transcript was created using OpenAI's Whisper API, then edited by me and Claude 2 using a strategy similar to Simon Willison's. Screenshots were created using shot-scraper.

EDIT 2024-02-26: GoodHuman owes me pay from June/July 2023, so I've updated these slides to remove their brand colours. I've also updated the link above that previously went to GoodHuman to go somewhere else.

Good morning. Today I'm going to cover the OWASP Top 10. If you're not across the OWASP Top 10, that's fine. This is just a super high-level introduction. I'm going to run through each of the 10 items on the list and then talk about some public exploits for each one.

So what is the OWASP Top 10? It's a regularly updated list of the most critical security risks to web applications.

It's produced by the Open Worldwide Application Security Project.

That's a group of about 50,000 security professionals who have joined as members. And you can be a member. It only costs $50 to join for a year, which helps support the projects that they do.

They get together for in-person events where they talk about this stuff. But they also have a really huge online community of security-focused web developers and security professionals.

Here's the top 10. I'm just going to dive into this.

But first I want to just caveat it all a little bit. For each of these items in the top 10, you could probably spend an entire day doing a workshop on them. And I'm going to try to spend two minutes on each one. So that's the depth that we're going to get to here.

I'm hoping to give you the exposure to what they are so that you can start to think about these things and how they apply within our application and the code that you're writing.

Let's jump in.

Number one on the list is broken access control, which is any situation where a user can access something that's outside of their intended set of permissions. We see that happen all the time. It's number one on the list because it's incredibly common and it can have a really high impact. There's a high risk of data exposure when you allow users to access things that they're not supposed to.

We see it happening through things like manipulating URLs, changing the contents of cookies, changing the contents of JWTs or how they're signed, and just generally manipulating things to do with an HTTP request that we're making to a service.

We also see missing access control on unexpected HTTP methods. This is a specific type of web vulnerability where, say, you've got the access control on the GET request, but then someone makes a PATCH request and that circumvents the access control and still returns the object.

Things like that can be really common in web frameworks where you're relying on the framework to do a lot of that access control and checking.
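
One way to guard against the unexpected-method problem is to make authorization deny-by-default, so every HTTP method needs an explicit rule before any handler runs. Here's a minimal, framework-agnostic Python sketch. The `REQUIRED_PERMISSION` table and the permission names are hypothetical:

```python
# Hypothetical mapping from HTTP method to the permission it requires on an
# "orders" resource. Any method not listed here is refused (deny by default).
REQUIRED_PERMISSION = {
    "GET": "orders:read",
    "HEAD": "orders:read",
    "PATCH": "orders:write",
    "PUT": "orders:write",
    "DELETE": "orders:delete",
}


def is_allowed(method: str, user_permissions: set[str]) -> bool:
    """Return True only if the method has a rule and the user holds that permission."""
    required = REQUIRED_PERMISSION.get(method.upper())
    if required is None:
        # Unknown or unexpected methods (e.g. TRACE) never reach a handler.
        return False
    return required in user_permissions
```

The idea is to call this once, in middleware, for every request, rather than relying on each handler to remember its own check. That way a PATCH can't skip a check that only lives in the GET handler.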

This is an example of what you might see in the network tab of Google Chrome or Firefox. If I ever see this URL, there's a 100% chance that I'm going to change that final 2 to a 3 and just see what happens.

And in the case of Optus, what might happen is you return the full customer record for that next customer ID, regardless of who you're authenticated as.

For each of these, I'm going to try to give you a brief example of how this has gone catastrophically wrong for somebody. And in the case of Optus, they had a public API endpoint to get customer information that took a customer ID and didn't require any authentication. Someone found that, just enumerated through all of the sequential customer IDs, and saved that data.
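
The fix for this class of bug is to check, on every request, that the authenticated caller is allowed to see that specific object, not just that they're logged in. Here's a minimal sketch. It assumes a hypothetical in-memory store and a `Customer` record with an owning `account_id`. It illustrates the check and isn't how Optus's API actually worked:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:
    id: int
    account_id: int  # the account this record belongs to
    name: str


class NotFound(Exception):
    """Raised for both missing and not-yours records, so IDs can't be probed."""


class Unauthenticated(Exception):
    """Raised when there is no logged-in caller at all."""


def get_customer(store: dict[int, Customer], customer_id: int,
                 current_account_id: Optional[int]) -> Customer:
    if current_account_id is None:
        raise Unauthenticated("login required")
    customer = store.get(customer_id)
    # Same error whether the record doesn't exist or belongs to someone else.
    if customer is None or customer.account_id != current_account_id:
        raise NotFound(customer_id)
    return customer
```

Returning the same error for "doesn't exist" and "not yours" avoids confirming which IDs are valid. Random, non-sequential IDs make enumeration harder, but they complement this check rather than replace it.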

Number two is cryptographic failures.

Effectively, just bad cryptography that leads to unexpected data exposure, which is a pretty broad statement. And it's actually a really big category of different types of vulnerabilities.

It encompasses things like weak TLS configurations that allow a person-in-the-middle attack. So that's having bad ciphers or having a weakly generated SSL certificate.

Sticking with SSL, it also covers the HSTS header. If you're not across what the HSTS header does, it tells the browser that, for a set amount of time after it has accessed your website over HTTPS, it should refuse to connect to it over plain HTTP. If you don't set it up properly, you can suffer from downgrade attacks, where an attacker sends the user to the HTTP version of your website and intercepts that traffic.
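
The header itself is a single line. Here's a small sketch that builds it. The one-year `max-age` and `includeSubDomains` are common choices rather than requirements, and `preload` only makes sense once you've submitted your domain to the browsers' HSTS preload list:

```python
ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # 31,536,000


def hsts_header(max_age: int = ONE_YEAR_SECONDS, include_subdomains: bool = True,
                preload: bool = False) -> tuple[str, str]:
    """Build a Strict-Transport-Security (name, value) pair for HTTPS responses."""
    if max_age < 0:
        raise ValueError("max_age must be non-negative")
    directives = [f"max-age={max_age}"]
    if include_subdomains:
        directives.append("includeSubDomains")  # also cover every subdomain
    if preload:
        directives.append("preload")  # opt in to browser preload lists
    return "Strict-Transport-Security", "; ".join(directives)
```

Browsers ignore this header when it arrives over plain HTTP, so it only protects a visitor after their first HTTPS visit. The preload list is what closes that first-visit gap.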

Another huge one here is the use of default passwords and secrets in the application. I'll share the slides later, but these four links down here go to GitHub repos full of default passwords for things like routers, MongoDB instances, fresh installs of PHP apps and so on. So many data breaches happen because attackers simply try the default credentials on applications that are publicly reachable on the internet and then dump everything out of them.

It also covers the use of weak algorithms and keys with low entropy, which includes using bad password hashing algorithms.

You should just use bcrypt or Argon2 if you need to hash a password, or do what we do and rely on Firebase, Auth0 or someone else who gets paid a huge amount of money to get that stuff right.
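
In Python, bcrypt and Argon2 both need third-party packages, so this sketch swaps in scrypt from the standard library (`hashlib.scrypt`, which needs a Python built against OpenSSL 1.1 or later). Like Argon2, scrypt is a memory-hard algorithm, and OWASP's password storage guidance lists it as acceptable. The cost parameters and the `scrypt$...` storage format are assumptions for illustration:

```python
import hashlib
import hmac
import secrets

# scrypt cost parameters: n (CPU/memory cost, a power of 2), r (block size),
# p (parallelism). These use roughly 16 MiB per hash; tune for your hardware.
N, R, P = 2**14, 8, 1
DKLEN = 32


def hash_password(password: str) -> str:
    salt = secrets.token_bytes(16)  # unique random salt per password
    digest = hashlib.scrypt(password.encode(), salt=salt, n=N, r=R, p=P, dklen=DKLEN)
    # Store the parameters alongside the hash so they can be raised later.
    return f"scrypt${N}${R}${P}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    scheme, n, r, p, salt_hex, digest_hex = stored.split("$")
    if scheme != "scrypt":
        raise ValueError(f"unsupported scheme {scheme!r}")
    candidate = hashlib.scrypt(password.encode(), salt=bytes.fromhex(salt_hex),
                               n=int(n), r=int(r), p=int(p), dklen=len(digest_hex) // 2)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))
```

Keeping the cost parameters in the stored string is what lets you raise them later without breaking existing hashes.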

Accidental key exposure falls into this as well. My example is from March of this year, when GitHub accidentally published its RSA SSH host private key in a public GitHub repo.

Heaps of people seem to have had access to that key, which is a bit scary considering that key is what you'd need to impersonate GitHub's SSH server and enable interception of SSH traffic to GitHub.

One thing they got called out for was saying they had replaced the RSA key "out of an abundance of caution." They leaked the RSA key publicly; replacing it isn't an abundance of caution. Once that happens, the only acceptable response is to rotate the key.

Every web developer's favourite: injection.

Injection is a situation where user-supplied data is directly injected into your code. You're allowing a user to change the behaviour of your application when they submit data.

Obviously SQL injection is something we're all probably pretty aware of, but it's not just SQL you have to care about here. LDAP and NoSQL queries can be injected too, and if you're passing input to a system command to generate a PDF or something like that, that can also be vulnerable to injection. ORMs aren't immune either: there are plenty of CVEs, including some pretty critical ones, for ORM injection exploits.
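
For the command-line case, the safest pattern in Python is to pass arguments as a list so that no shell ever parses them. Here's a sketch. `run_tool` is a hypothetical helper, and the demo uses the Python interpreter as a stand-in for a real PDF tool so it runs anywhere:

```python
import subprocess
import sys


def run_tool(argv: list[str], user_value: str) -> str:
    """Run a command with user_value passed as one literal argument.

    With a list and the default shell=False, characters like ; | && $( ) reach
    the program unchanged instead of being interpreted by a shell.
    Avoid: subprocess.run(f"tool {user_value}", shell=True)
    """
    result = subprocess.run([*argv, user_value], capture_output=True, text=True, check=True)
    return result.stdout


# Stand-in "tool" that just prints its first argument back.
ECHO = [sys.executable, "-c", "import sys; print(sys.argv[1])"]
```

For example, `run_tool(ECHO, "report.html; echo pwned")` returns the whole string as-is instead of running a second command. A value starting with `-` can still be read as an option by the tool itself, so validate inputs as well.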

The example is the Bobby Tables XKCD comic. It's about a mom who named her son "Robert'); DROP TABLE Students;--", and the school had a heap of problems with that name.
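
Here's Bobby Tables run against SQLite with a parameterized query, using Python's built-in `sqlite3` module. The table and column names are made up for the demo:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

name = "Robert'); DROP TABLE Students;--"

# Vulnerable (don't do this): the name becomes part of the SQL text, and
# executescript would happily run the injected DROP TABLE.
#   conn.executescript(f"INSERT INTO students (name) VALUES ('{name}');")

# Safe: the ? placeholder sends the name as data, never as SQL.
conn.execute("INSERT INTO students (name) VALUES (?)", (name,))
conn.commit()

stored = conn.execute("SELECT name FROM students").fetchone()[0]
tables = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'").fetchall()
print(stored)  # Robert'); DROP TABLE Students;--
print(tables)  # [('students',)]
```

The name is stored exactly as typed and the table survives. Most database drivers and ORMs offer the same placeholder mechanism, even if the placeholder syntax differs.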

Insecure design is next on the list. And it covers design and architectural flaws in your system.

It's one that was introduced in 2021 because they want teams thinking more about the idea of secure by design, doing activities like threat modelling to understand the risks of changes that you're introducing and improving the security posture through training and through better design of the application that you're working on.

Pretty much all of these things are design flaws, even something as simple as a SQL injection, right? It's like you've designed that API endpoint to allow the user to do that. And you can pick those things up at the design phase through better practices around security, but also through understanding the risks that you're potentially introducing.

Huge, huge example here from November last year is LastPass. I link to all of the examples at the end, and the LastPass security incidents section on Wikipedia is probably longer than the rest of the article combined. It's huge.

The issue they had, and what I would definitely classify as bad design, is that in 2022 someone got in and downloaded a whole bunch of backups of customer password vaults. You would think that's not the end of the world: they're encrypted with a key derived from my master password...

The design flaw was that only some fields in the vault, like the passwords themselves, were encrypted; the website URLs were not. So now the attackers have a massive list of customers' email addresses and, from what they were able to get out of those backups, which sites each of them has accounts with.

And then there was a secondary design flaw that makes things even worse: effectively, if you hadn't changed your master password in the last few years, it had only gone through a thousand rounds of their hashing algorithm, which made those vaults much easier to crack.
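
The general fix for that second flaw is to store the work factor next to each hash and raise it when the user next logs in, since that's the one moment you have the plaintext. Here's a sketch of that pattern using PBKDF2-HMAC-SHA256 from Python's standard library. The 600,000 target follows OWASP's 2023 guidance for PBKDF2-HMAC-SHA256, and the `StoredHash` record is an assumption. This illustrates the idea, not LastPass's actual scheme:

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

TARGET_ITERATIONS = 600_000  # OWASP's 2023 guidance for PBKDF2-HMAC-SHA256


@dataclass
class StoredHash:
    iterations: int  # the work factor travels with the hash
    salt: bytes
    digest: bytes


def derive(password: str, salt: bytes, iterations: int) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)


def new_hash(password: str, iterations: int = TARGET_ITERATIONS) -> StoredHash:
    salt = secrets.token_bytes(16)
    return StoredHash(iterations, salt, derive(password, salt, iterations))


def login(password: str, stored: StoredHash) -> tuple[bool, StoredHash]:
    """Verify the password; if it's correct but the hash is weak, upgrade it."""
    candidate = derive(password, stored.salt, stored.iterations)
    if not hmac.compare_digest(candidate, stored.digest):
        return False, stored
    if stored.iterations < TARGET_ITERATIONS:
        # We have the plaintext right now, so re-hash at the current cost.
        stored = new_hash(password)
    return True, stored
```

Accounts whose owners never log in again stay at the old cost with this approach, which is why upgrading has to be a deliberate part of the design rather than an afterthought.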

Next on the list is security misconfiguration: applications that are improperly secured because something has been misconfigured.

The classic one in the web development world is a MongoDB instance that's open to the internet, so anyone can grab everything out of it.

It also covers cases where you've missed steps in your go-live checklist or in the hardening documentation for a piece of software you're adding to your infrastructure.

It also covers situations where you accidentally deploy to production with development configs. The example I used a couple of years ago, when I gave a presentation about this, was Twitter deploying source maps and the full unmodified source to production. That's not something you necessarily want to do when you're a company that size.
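
A cheap guard is to have the app refuse to start when production is running with development settings. Here's a sketch. The environment variable names (`APP_ENV`, `APP_DEBUG`, `SERVE_SOURCE_MAPS`) are hypothetical, so map them to whatever your stack actually uses:

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    environment: str
    debug: bool
    serve_source_maps: bool


def _flag(env: Mapping[str, str], name: str) -> bool:
    # Anything other than an explicit "1"/"true" counts as off (safe default).
    return env.get(name, "").strip().lower() in ("1", "true")


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    settings = Settings(
        environment=env.get("APP_ENV", "production"),  # assume strictest env if unset
        debug=_flag(env, "APP_DEBUG"),
        serve_source_maps=_flag(env, "SERVE_SOURCE_MAPS"),
    )
    if settings.environment == "production":
        enabled = [name for name, on in (("APP_DEBUG", settings.debug),
                                         ("SERVE_SOURCE_MAPS", settings.serve_source_maps)) if on]
        if enabled:
            # Fail closed: crash at startup rather than serve dev config publicly.
            raise RuntimeError(f"refusing to start in production with: {', '.join(enabled)}")
    return settings
```

Defaulting to "production" when nothing is set means a forgotten variable makes the app stricter, not looser.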

Another example is features that are enabled but not being used, which increase the attack surface. That includes enabling things that are still a work in progress or haven't been properly secured.

That kind of goes into security by design and baking in security from day zero to make sure that when you enable something or if something gets accidentally enabled, you're not increasing the attack surface.

An example here, and this has hit companies here in Australia, is this article from Krebs about how many public Salesforce sites are leaking private data.

Effectively, there's a default configuration for public Salesforce sites where, if you allow anonymous users to write data, those anonymous users can read data as well, including data submitted by other anonymous users. So if you spin up a little site to run a quiz or collect some feedback, anonymous users can just change the URL and see everyone's responses.

That's effectively the default configuration, and it has hit banks, healthcare providers and government agencies who pay consultants a heap of money to deploy a Salesforce site for something, and then it doesn't get secured properly.

Vulnerable and outdated components.

This covers applications using components with known security vulnerabilities. You've probably all seen the likes of Dependabot before, which detects dependencies that are out of date and, in particular, dependencies with known security issues that you need to update.
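
Under the hood, tools like Dependabot compare the versions you depend on against an advisory database. Here's a toy version of that comparison. The package names and advisory data are invented, and real tools use proper version-range semantics (pre-releases, affected ranges) rather than this plain numeric parse:

```python
from typing import Mapping


def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted version like '2.3.4' (no pre-release tags)."""
    parts = [int(part) for part in version.split(".")]
    # Drop trailing zeros so "1.2" and "1.2.0" compare as equal.
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def find_vulnerable(installed: Mapping[str, str],
                    first_patched: Mapping[str, str]) -> list[str]:
    """Return packages whose installed version is older than the first patched one."""
    findings = []
    for package, version in sorted(installed.items()):
        fixed = first_patched.get(package)
        if fixed is not None and parse_version(version) < parse_version(fixed):
            findings.append(f"{package} {version} < {fixed}")
    return findings
```

For example, with `example-http` 2.3.4 installed and an advisory saying 2.3.5 is the first fixed release, `find_vulnerable` reports `example-http 2.3.4 < 2.3.5`.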

This one covers both accidental vulnerabilities and malicious changes. In open source projects, developers generally aren't intending to introduce vulnerabilities, so those are accidental, but malicious changes definitely do happen.

When a malicious change is specifically targeting a set of users, that's become known as a supply chain attack where someone is specifically targeting you by modifying a dependency in your supply chain. And that's something that we've seen a lot of in the last three or four years.

The example here is the node-ipc library. In March 2022, after the Russian invasion of Ukraine, the developer updated that library to wipe files if you happened to run it from within Russia. We might support that action, but it's not something you want to accidentally run in your build pipeline, on a build server or in your production environment, where someone's updated package wipes a bunch of files off your computer.

Identification and authentication failures, which covers allowing the wrong user to authenticate to a system.

That might be a malicious user, or it might just be a user who has logged into one system and unintentionally gets access to another. This covers things like credential stuffing, which I've seen a bunch of at companies I've worked for, where attackers just try to log in with a whole bunch of leaked credentials.

Credential stuffing becomes a big deal when you allow customers to have weak and previously leaked passwords. Checking password strength when a password is set or changed is one way to combat this, and checking new passwords against public databases of leaked passwords is another great step we can take.
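
Have I Been Pwned's Pwned Passwords range API lets you do that check without sending the password anywhere. You SHA-1 the password, send only the first five hex characters, and compare the returned list of hash suffixes locally (a k-anonymity model). Here's a standard-library sketch. The network call isn't exercised here, and a production version would want retries and a decision about what to do when the API is unavailable:

```python
import hashlib
import urllib.request

RANGE_URL = "https://api.pwnedpasswords.com/range/{prefix}"


def split_sha1(password: str) -> tuple[str, str]:
    """Return (first 5 hex chars, remaining 35) of the uppercase SHA-1 of the password."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def count_in_range(body: str, suffix: str) -> int:
    """Parse a range response ('SUFFIX:COUNT' per line) and return the count for suffix."""
    for line in body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix:
            return int(count)
    return 0


def times_pwned(password: str, timeout: float = 5.0) -> int:
    prefix, suffix = split_sha1(password)
    # Only the 5-character prefix ever leaves this machine.
    request = urllib.request.Request(RANGE_URL.format(prefix=prefix),
                                     headers={"User-Agent": "password-check-sketch"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return count_in_range(response.read().decode("utf-8"), suffix)
```

SHA-1 is fine here because it's only used as a lookup key for the API, not for storing passwords.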

This one also covers misconfigured authentication, such as being able to bypass MFA. If an attacker has a customer's username and password but the customer has MFA, that should stop the attacker from logging in, but there are vulnerabilities that allow MFA bypasses. That's one where you'd be chaining multiple attacks together, but it's something we do see in the wild. That's what happened to Uber: one of their employees got hacked, the attackers bypassed MFA to get into their network, and then they found PowerShell scripts full of tokens that had access to customer data.

OAuth scope creep is where someone logs in via OAuth and an attacker changes the scopes that are granted. So you're logging in with a legitimate account, but you end up generating a token that has more privilege than expected.
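
On the authorization-server side, one defence is to never trust the scopes in the request: check them against what that client is registered for, and reject the request rather than silently widening it. Here's a sketch with a hypothetical client registry. The only protocol detail assumed is that OAuth 2.0 sends `scope` as a space-delimited string:

```python
from typing import Mapping

# Hypothetical registry: the maximum scopes each client may ever receive.
CLIENT_ALLOWED_SCOPES: Mapping[str, frozenset[str]] = {
    "mobile-app": frozenset({"profile:read", "orders:read"}),
    "admin-console": frozenset({"profile:read", "orders:read", "orders:write"}),
}


class InvalidScope(Exception):
    """Corresponds to an OAuth 2.0 'invalid_scope' error response."""


def validate_scopes(client_id: str, requested: str) -> frozenset[str]:
    # Unknown clients get no scopes at all, so every request from them fails.
    allowed = CLIENT_ALLOWED_SCOPES.get(client_id, frozenset())
    scopes = frozenset(requested.split())  # scope is space-delimited
    if not scopes:
        raise InvalidScope("no scopes requested")
    extra = scopes - allowed
    if extra:
        raise InvalidScope(f"not permitted for {client_id}: {sorted(extra)}")
    return scopes
```

Rejecting outright, instead of quietly dropping the extra scopes, also makes tampering visible in your logs.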

And then there's unverified user registration, which I'm sure we're handling quite well: situations where someone is able to register a new account when they're not supposed to be able to.

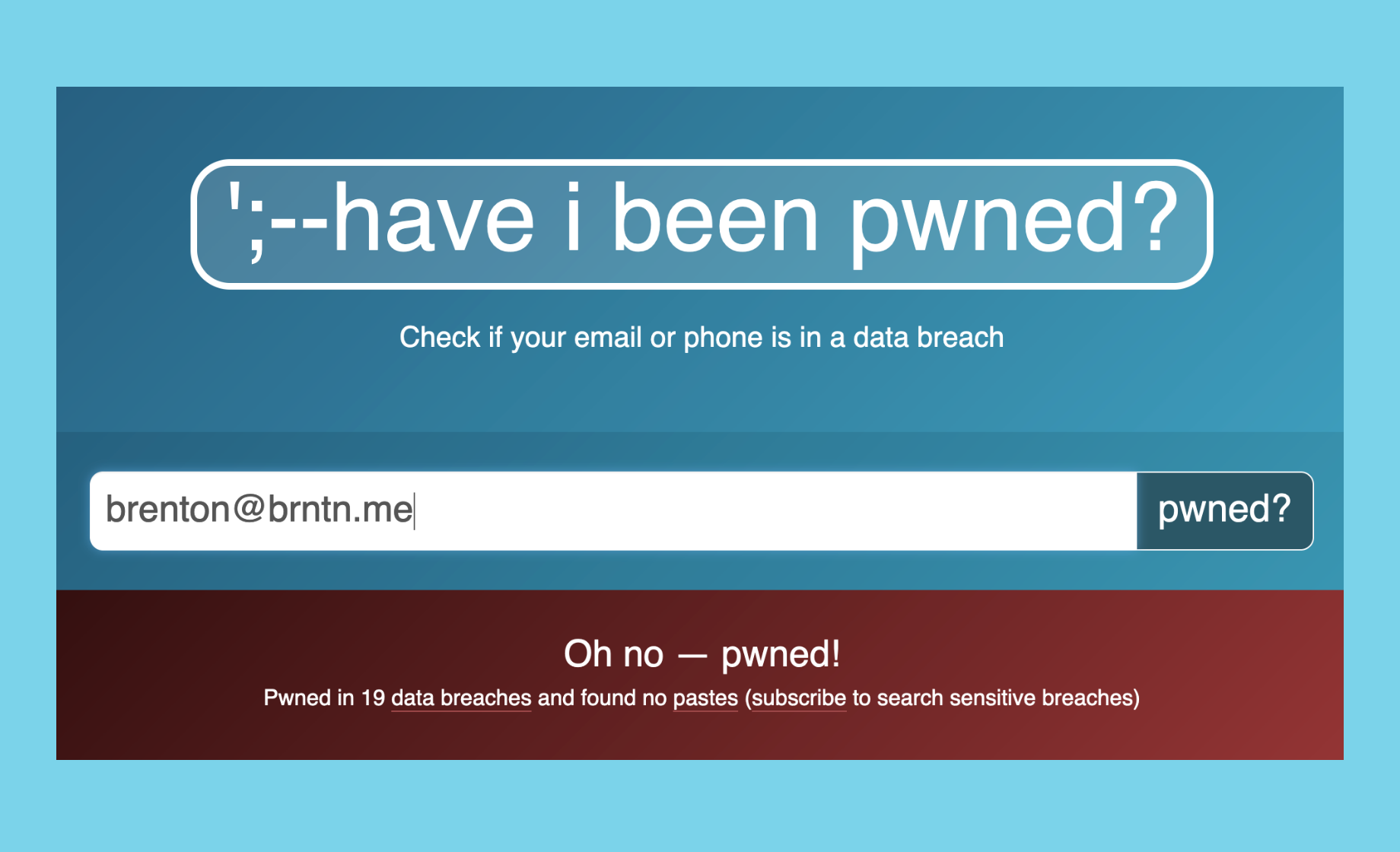

The example here is not really that much of an example, but if you haven't seen the Have I Been Pwned website, I've linked to it at the end, and I definitely recommend going to have a look and entering your personal email address. My own email address is in 19 data breaches on the site. Everyone's details are on the internet, and we just have to deal with that.

Using MFA and unique passwords is the best way to combat data breaches like these.

Software and data integrity failures

This was another one introduced in 2021. It's about pipelines, and assuming that the build you just built and tested in your build environment is actually what ends up being deployed.
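
One way to tie what's deployed back to what was tested is to record a cryptographic digest of the artifact at build time and refuse to deploy anything that doesn't match. Here's a sketch. Passing the expected SHA-256 from the build step to the deploy step is an assumption about your pipeline, and signing the artifact is the stronger version of the same idea:

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large artifacts don't need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise if the artifact about to be deployed isn't the one that was built."""
    actual = sha256_file(path)
    if actual != expected_sha256.lower():
        raise RuntimeError(f"{path.name}: digest {actual} does not match {expected_sha256}")
```

A digest only proves the artifact wasn't swapped after the build. It can't catch a compromised build, which is why the build environment itself needs protecting too.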

This also covers using dependencies from third parties without verifying their contents. When we find a great third-party library to do something, do we actually go and look at the source code and make sure it's not doing something stupid?

It also covers allowing untrusted code into your ecosystem, whether that's a dependency you've added or a new component you're putting into your system. How much do you trust that piece of code?

I'm not going to go into too much detail about the SolarWinds attack because it's pretty old now, but it was a hack by Russian government hackers that hit a whole bunch of US companies and government agencies. The attackers compromised SolarWinds' build system and slipped a backdoor into updates for its Orion network monitoring software. Because the malicious code was added during the build, the updates were signed with SolarWinds' own certificate, so customers installed them as trusted, and the attackers then used that access to get into their networks. It's definitely one to go and read about: there are books written about this hack, and it's a really great one to dive deep into.

Next up is security logging and monitoring failures.

So this one covers not having enough information to investigate an issue. So when a security incident does occur, do you have enough information to figure out what the attacker actually had access to, how they got in, what customer information was exposed and those sorts of things?

And then it also covers logging too much information. So if the attacker does get in and all they've got access to is your logs, but they're able to scrape your logs for customer information and passwords, that would be bad.
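
For the logging-too-much side, one safety net is a logging filter that scrubs obvious secrets before anything is written. Here's a sketch using Python's standard `logging` module. The key names and the email pattern are assumptions, and pattern-based redaction will always miss things, so it backs up, rather than replaces, not logging sensitive data in the first place:

```python
import logging
import re

# Hypothetical sensitive keys written as key=value, plus a rough email pattern.
_SECRET_KV = re.compile(r"\b(password|passwd|token|secret|api_key)=\S+", re.IGNORECASE)
_EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class RedactingFilter(logging.Filter):
    """Rewrite each record's message with secrets and email addresses masked."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first
        message = _SECRET_KV.sub(r"\1=[REDACTED]", message)
        message = _EMAIL.sub("[EMAIL]", message)
        record.msg = message
        record.args = ()  # args are already merged into msg
        return True  # never drop the record, just clean it
```

Attach it to each handler with `handler.addFilter(RedactingFilter())`. Filters on a handler also see records propagated from child loggers, whereas filters on a logger only see records logged directly to it.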

An example of this, probably more of the former but it could also end up being the latter, is the Latitude Financial hack earlier this year. Initially they announced that they'd been attacked and thought about 15,000 customer records had been accessed. A week later it was 300,000, and two weeks after that it was seven or eight million people. That's an example of not having the information at hand to understand the impact of the incident, and that really dictates how you handle an incident like that.

Lucky last on the list is server-side request forgery. It's kind of an odd one on the list here because it's very specific. And I think if you look at the OWASP Top 10 page, this one was added through a community survey. It is a really important vulnerability to understand.

SSRF is effectively allowing your server to make requests to a resource specified by a user. One way it shows up is in requests to internal endpoints that reveal metadata about the service.

A common attack is to point screenshot services at the AWS instance metadata IP address. That dumps out a screenshot of the JSON that includes the AWS credentials for that service, among other things.
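
A common first line of defence is to resolve the user-supplied host and refuse anything that isn't a public address, which blocks `169.254.169.254` and friends. Here's a standard-library sketch. `check_outbound_url` is a hypothetical helper with real limits: your HTTP client will resolve the name again (so DNS rebinding can slip past unless you connect to the checked IP), and every redirect hop needs the same check. On AWS, requiring IMDSv2 on instances also blunts this particular attack:

```python
import ipaddress
import socket
from urllib.parse import urlsplit


class BlockedURL(ValueError):
    """Raised when a user-supplied URL should not be fetched server-side."""


def check_outbound_url(url: str) -> list[str]:
    """Return the resolved addresses if every one is public, else raise BlockedURL."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        raise BlockedURL(f"scheme not allowed: {parts.scheme!r}")  # e.g. file://
    if not parts.hostname:
        raise BlockedURL("URL has no host")
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        infos = socket.getaddrinfo(parts.hostname, port, proto=socket.IPPROTO_TCP)
    except OSError as exc:
        raise BlockedURL(f"cannot resolve {parts.hostname!r}") from exc
    addresses = []
    for _family, _type, _proto, _canon, sockaddr in infos:
        ip = ipaddress.ip_address(sockaddr[0])
        # is_global is False for loopback, private, link-local (169.254/16), etc.
        if not ip.is_global:
            raise BlockedURL(f"{parts.hostname!r} resolves to non-public address {ip}")
        addresses.append(str(ip))
    return list(dict.fromkeys(addresses))  # de-duplicate, keep order
```

Checking every resolved address matters, because a hostname can return several records and only one of them needs to be internal.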

You can also use this to trick servers into mining cryptocurrency. If you've got a form that accepts a URL and someone enters the URL of a page that runs a cryptocurrency miner, maybe your server will just sit there doing that forever.

A couple of examples here. If anyone in the chat can tell me what this URL is actually going to access, I'd love to know.

And this URL, if anyone knows what that one accesses. Both are perfectly valid URLs, and both are things I think you'll see in a pen test.

And the example here is this one from a hacker named Orange Tsai. I've linked to it at the end, but they basically took a pretty simple SSRF in GitHub Enterprise's handling of webhooks and chained it, via the Memcached instance inside the GitHub Enterprise deployment, into remote code execution. They got tens of thousands of dollars from the GitHub bug bounty for what began as a really simple SSRF attack.

Well, I'm 54 seconds over, but here's my super quick wrap-up.

The OWASP Top 10 is amazing. You should go and read it. It's highly, highly accessible. It's super straightforward to read through, but it's really only the first 10 of heaps of issues that we have to care about as web developers. So hopefully we can keep talking about this stuff more in the future.

The advice that I want to leave everyone with is really just to think about security as early as possible during the planning and design phase of the work that we're doing and make sure that it's something that we're considering. And that's it.

Resources if you want to learn more

Exposure links

- Optus breach details

- GitHub RSA key leak

- Exploits of a Mom

- LastPass security incidents

- Salesforce public sites

- node-ipc updated to support Ukraine

- Have I Been Pwned?

- SolarWinds (non-paywalled link)

- Latitude breach just got much worse

- GitHub SSRF exploit chain